If you are administering a very large SQL Server database that uses integer IDs, you may have cause to be concerned about how many identity values you have left for your tables.

This is a particular concern for heavy transactional tables where new rows are constantly being inserted and there are other reasons why identity values can soar which I will cover further below.

In this article, I am going to start off by discussing identity columns and the possible reasons for having large identity values in your database tables. I will then provide a simple SQL query that allows you to see how many IDs are remaining for each table in your database.

Identity columns

Identity columns in SQL Server are used to hold automatically generated key values that typically serve as the primary key for a database table.

Usually, either unique identifiers (GUIDs) or integers are the data type of choice for identity columns.

In the sections that follow, I will be focusing on integer data types in SQL Server.

Integer data types

SQL Server features TINYINT, SMALLINT, INT, and BIGINT integer data types.

The most common data type choice for an ID column in a database is INT which represents a 32-bit integer value.

Some developers prefer to use the smallest possible data type based on the maximum number of rows that a database table is expected to contain. This approach saves space in the database and can be quite a safe approach for certain scenarios. For example, imagine a table that is intended to contain customer types e.g. Individual, Business, Charity etc. In this case, it might be quite reasonable to assume that there would be less than 255 types of customer (255 is the maximum value for a TINYINT).

Another common approach is to use the INT data type for all database table identity columns. This approach removes the decision-making process and helps to guard against most of the concerns with database tables growing beyond original expectations. However, it is not foolproof either as you’ll see in a moment.

Running out of values

Even though the maximum value of the INT data type is 2,147,483,647 there are still cases where this may not be enough.

Imagine a heavily used transactional table or an audit table where new rows are continually being pumped in. Over a period of months or years, the maximum INT value can be exceeded. This scenario is even more likely in a multi-tenant environment where the same database is shared by different tenants.

Failed inserts can cause identity values to be wasted. Every time a row insert fails in a table containing an identity column, the identity value will be increased regardless. If there is an application defect that repeatedly fails to insert a row (e.g. in an infinite loop), or there is a rogue script or scheduled task that has gone out of control this scenario can cause you to run out of IDs much faster than anticipated.

Another possible scenario is complications with SQL Server Merge Replication. In rare cases, a misconfigured replication setup can cause new identity ranges to be repeatedly issued, increasing the current identity values at a greatly accelerated pace.

The need to monitor

Whatever the reason for large volumes of data, and thereby the associated increase in the number of IDs used in a table, it is very useful to have a way of reporting on the current identity situation within your database.

The simplest way to monitor identity values is via a SQL query.

Identity monitoring

Let’s look at how to monitor how many IDs are left for your database tables.

The Query

The following simple query can be executed against your database to help you report on identity value statistics.

SELECT TABLE_NAME AS [Table Name], IDENT_CURRENT(TABLE_NAME) AS [Current Identity], 2147483647 - IDENT_CURRENT(TABLE_NAME) AS [IDs Remaining], (IDENT_CURRENT(TABLE_NAME) / (2147483647 - IDENT_CURRENT(TABLE_NAME))) * 100 AS [Percentage Used] FROM INFORMATION_SCHEMA.TABLES WHERE OBJECTPROPERTY(OBJECT_ID(TABLE_NAME), 'TableHasIdentity') = 1 AND TABLE_TYPE = 'BASE TABLE' ORDER BY [Current Identity] DESC

The above SQL selects and calculates all of the data to display from the INFORMATION_SCHEMA.TABLES system table.

The IDENT_CURRENT function returns the current identity value of the table with the specified name.

Note the use of the value 2147483647 which is the largest possible value for the INT data type in SQL Server. This value is used to calculate the number of IDs remaining, as well as the percentage of IDs that have already been used for a table.

The WHERE clause uses the OBJECTPROPERTY and OBJECT_ID functions to filter the results to only tables which have an identity column.

The TABLE_TYPE is restricted to ‘BASE TABLE’ which prevents rows with other table types such as ‘VIEW’ from being returned.

Lastly, the results are ordered by the largest identity value in a descending fashion.

The Results

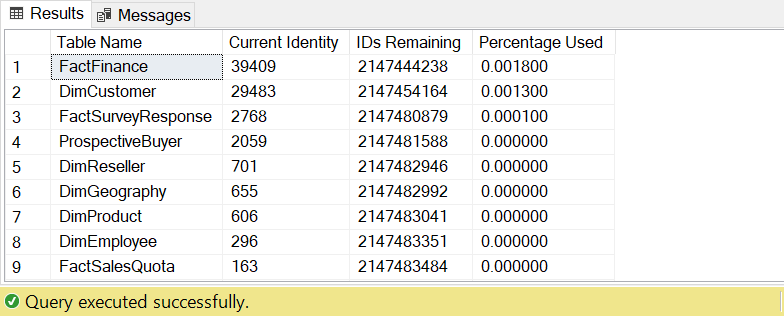

After running the query shown in the previous section, you will see a result set similar to the following screenshot.

The above screenshot shows the results displayed whenever the query is executed against the standard AdventureWorks database. In a larger database, you’ll see much higher identity values and percentage used statistics.

The results are helpfully ordered by the ‘Current Identity’ value, drawing your attention to tables with identity values that are closest to the maximum INT value.

The ‘Percentage Used’ column provides a quick visual indication of how close the identity value of a specific table is to the maximum.

Solutions

Before concluding this article I would like to briefly mention some of the possible solutions to the problem of large identity values.

Change data type

If you don’t mind your table growing even larger, and your application data layer can be easily updated to use a different data type for your ID columns, the simplest solution may be to simply alter your schema to use a data type that supports larger values.

ALTER TABLE DimCustomer ALTER COLUMN CustomerKey BIGINT NOT NULL

Note that you should be aware of potential issues when running something like the above SQL statement on a large database table e.g. table locking, log file growth, and associated keys and indexes that may need to be dropped and recreated.

The above SQL updates the identity column to a BIGINT which has a maximum value of 9,223,372,036,854,775,807!

Reseed

If your IDs start with a value of 1 you could potentially RESEED your table to start with a minus value i.e. -2147483648 instead.

DBCC CHECKIDENT('DimCustomer', RESEED, -2147483648)

Any rows added to the table after executing the above SQL will start off with the specified minus value and increase by 1 as usual, so you’ll not run out of IDs again until you reach zero.

I don’t like this approach as it looks ugly and quite often application code contains checks to make sure that an ID value is greater than zero, for example, to determine if a record is new or is being edited. Therefore, this may not be a workable solution for some situations.

Bulk copy

If your database table is a base-level table, where its identity value is not referenced by other tables, another possible solution is to create a new table and use the bcp Utility to bulk copy data from the table that is running out of IDs.

This can be a workable solution if there are significant gaps in between your identity values. For example, as in the case of the replication issue that I mentioned near the beginning of the article.

I plan to cover how to automate this approach in a future article.

Summary

In summary, I have covered what identity columns are in SQL Server and why you might run into problems with large identity values in your database.

I have demonstrated how to report on current identity statistics using a SQL query to see how many IDs are remaining for each table in your database.

Lastly, I have briefly discussed some ideas regarding how to resolve problems with large identity values.

Comments